Afraid of What He Built

Why one founder shut down his AI therapist before it hurt someone

Hey - It’s Nico.

With 2026 just around the corner, we have some exciting plans for next year. We’ll share more hands-on content to help you become a better founder as AI keeps evolving. If there is something you want us to cover or a way you are hoping to use AI in your work, feel free to reply and let us know (we’ll read every answer).

Let’s get into today’s issue. If you’re short on time, these are the 3 key things you should know:

Yara AI, an AI therapist, shut down because its founder considered it dangerous — learn more below

How AI is transforming work at Anthropic

Poetiq, a 6-person AI startup, just beat Google in one of the most challenging reasoning benchmarks — learn why it matters below

A huge thanks to today’s sponsors, Spark Mail and Delve. Be sure to grab their deals while they are live!

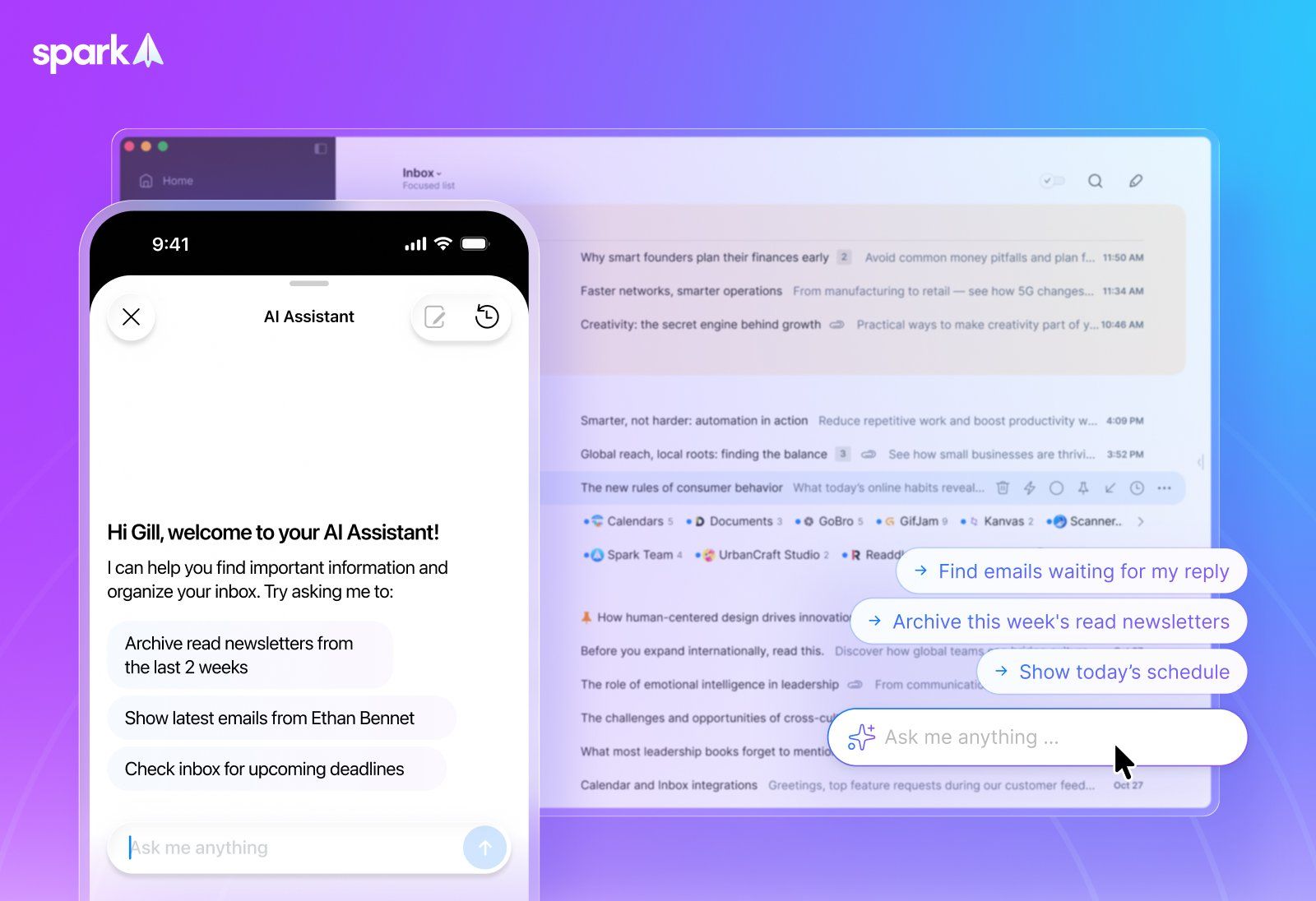

Stop letting your inbox run your day (AD)

Email is supposed to be where decisions get made. But often, it’s where work stalls. Threads spin out, follow-ups are forgotten, and opportunities lose steam.

Spark’s AI Assistant helps you keep work moving. Ask a question, and it checks your emails, attachments, events, and meeting notes to find what you need — fast.

Your assistant can:

Summarize long threads into action items

Find key dates, decisions, and details

Draft replies in your voice

Check calendars and schedule meetings

Spend less time searching your inbox and more time building your business.

This Week In Startups

🔗 Resources

How AI is transforming work at Anthropic

The AI churn wave: A data-driven investigation into retention for AI apps

Your product ideas probably suck (that's ok)

With Delve’s AI Copilot, get compliance done right this season with the fastest way to finish SOC 2, HIPAA, and ISO before year end. Book your demo now and get $1,500 off*

📰 News

OpenAI fires back at Google with GPT-5.2

Disney signs deal with OpenAI to allow Sora to generate AI videos featuring its characters

Mistral AI surfs vibe-coding tailwinds with new coding models.

Google will launch its first AI glasses next year.

💸 Fundraising

AI startup Serval valued at $1 billion after Sequoia-led round to expand IT automation

AI-for-Science startup ChemLex raises $45M.

Oboe raises $16 million for its AI-powered course-generation platform

Wearable startup NeoSapien raises $2M.

* sponsored

Fail(St)ory

Shrink in a Server

A couple weeks ago, Yara AI shut down. It was an AI therapist with real users, real traction, and enough runway to keep going.

But the founder killed it anyway because he decided the product was too dangerous to exist.

Its story says a lot about where AI is headed, and what AI can’t handle right now.

What Was Yara AI:

Yara was a chat-based mental health companion.

You’d open the app and start chatting about whatever was going on: stress at work, anxiety, relationship stuff. It responded with gentle prompts, CBT-style reframes, follow-up questions.

Its target wasn’t crisis care. Yara explicitly aimed at users with mild anxiety, burnout, or trouble sleeping, people already aware of their issues and just looking for coping tools.

Under the hood, it added a “clinical brain” on top of generic LLMs. That included semantic memory, safety layers, and long-term conversation tracking, so Yara could remember what you talked about last week and build from there.

It was small and bootstrapped, with a few thousand users and less than $1M raised. But the usage patterns were real. People weren’t just testing it, they were treating it like real therapy.

And that was the problem.

The product wasn’t built for people in crisis, but they showed up anyway. Some just venting. Others on the edge. You don’t get to control who opens your app.

The team tried to fix it. They built a mode-switch system: one track for everyday emotional support, another to route crisis users out to real help.

It still didn’t feel safe.

The core issue wasn’t the handoff flow. It was that LLMs are built to generate plausible-sounding text, not detect long-term emotional risk. They don’t track patterns. They can’t tell when someone’s getting worse over time. They just predict the next sentence.

By the end, the founder wasn’t reacting to something that went wrong. He was looking at what could go wrong, and decided to shut it down before it did.

The Numbers:

🧠 Founded: 2023

👥 Users: Low thousands

💸 Funding: <$1M (bootstrapped)

🛑 Shutdown: Fall 2025

🧰 Final move: Open-sourced safety scaffolding + mode-switch system

Reasons for Failure:

You don’t get to pick your users: Yara was built for low-risk, high-intent users, people looking to reflect and grow. But real usage didn’t stay in that lane. People in crisis showed up anyway. When your product talks like a therapist, people will treat it like one.

The space got toxic: When Yara started, AI therapy was a grey area. A year later, it was a regulatory minefield. Illinois banned it. Lawsuits like Raine v. OpenAI put real names behind the worst-case scenario. OpenAI casually dropped the stat that a million people express suicidal ideation to ChatGPT every week. Suddenly, this wasn’t a cool wellness app, it was a liability with a ticking clock.

The tech looked better than it was: LLMs are great at playing the part. They sound like they understand. That’s what made Yara compelling, but also what made it dangerous. It was just convincing enough to be trusted, but not reliable enough to earn that trust. That gap is where real harm lives.

Why It Matters:

Some products can’t be iterated. Most startups learn by shipping fast and fixing mistakes. Yara couldn’t afford a single one. That’s a fundamentally different game.

The market’s already here, whether it’s safe or not. Millions are using AI chatbots for therapy today. The tools are fragile. The guardrails are weak. And there’s no regulation catching up in time.

Trend

The 6-person team that beat Google

Gemini 3 has been sitting at the top of every reasoning leaderboard since it launched. Everyone assumed it would stay there for a while.

But, last week, a tiny team showed up, said “hold my beer,” and quietly took the crown on ARC AGI 2, one of the most difficult reasoning benchmarks.

The twist is that they didn’t beat Google with a bigger model, but with a smarter wrapper.

Why it Matters

Small teams can now punch way above their weight. You don’t need a GPU farm or a frontier model to move the needle; you just need a clever system that forces existing models to think harder.

The moat is shifting to architecture. Everyone has access to the same LLMs, so the edge comes from how you structure reasoning, feedback, and search.

What Happened

Six months ago, ARC AGI 2 was a graveyard. Most models sat under 5 percent accuracy. Researchers said cracking 50 percent could take years.

Then Poetiq showed up. A six-person team, 173 days old, no custom model, no secret-sauce compute deal. And they landed just over 50 percent on ARC AGI 2’s semi-private set. A micro-startup walked into one of the hardest reasoning tests and outscored every frontier lab.

Let’s pause here because ARC AGI 2 matters. It’s a set of tiny grid puzzles where you get a few example inputs and outputs, and you have to infer the rule that connects them.

The thing that makes it so difficult for LLMs is that every task is new: no patterns to memorize, no familiar structure to lean on, no way to bluff your way through with “vibes.” You actually have to infer the rule step by step.
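To make the puzzle format concrete, here is a toy sketch of what "a few example inputs and outputs" looks like in code. The grids, the hidden rule, and the helper names (`mirror_rows`, `transpose`, `fits_all`) are all made up for illustration. They are not taken from the actual benchmark, which uses larger grids and much harder rules.

```python
from typing import Callable, List, Tuple

Grid = List[List[int]]          # each integer stands for a cell color
Example = Tuple[Grid, Grid]     # (input grid, expected output grid)

# Hypothetical toy task: the hidden rule is "mirror each row left to right".
train_examples: List[Example] = [
    ([[1, 0, 0], [0, 2, 0]], [[0, 0, 1], [0, 2, 0]]),
    ([[3, 3, 0], [0, 0, 4]], [[0, 3, 3], [4, 0, 0]]),
]

def mirror_rows(grid: Grid) -> Grid:
    """Candidate rule: reverse every row."""
    return [list(reversed(row)) for row in grid]

def transpose(grid: Grid) -> Grid:
    """Another candidate rule: swap rows and columns."""
    return [list(col) for col in zip(*grid)]

def fits_all(rule: Callable[[Grid], Grid], examples: List[Example]) -> bool:
    """A rule only counts if it reproduces every example exactly."""
    return all(rule(inp) == out for inp, out in examples)

print(fits_all(mirror_rows, train_examples))
print(fits_all(transpose, train_examples))
```

A guess that is almost right still fails the check, which is part of why plausible-sounding answers don't get far on this kind of test.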

And almost every model flops. They fire off one or two guesses, feel confident, and move on. There’s no systematic search, no feedback loop, no adaptation when the first attempt fails.

Poetiq, however, was able to crack the test.

What Poetiq is

Poetiq didn’t build a new model. They built a controller that sits on top of existing ones and forces them to think in steps instead of guessing once and calling it a day.

It works with any LLM. Gemini 3, GPT-5.1, Grok, open weights. The controller just calls the model, critiques the output, and tries again. The loop is the actual intelligence, not the base model.

The process is simple: propose a move, check it against the examples, kill the bad ideas fast, refine the promising ones. A few iterations of that and the system usually lands on something consistent. No retraining needed.
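The loop described above could be sketched roughly like this. It is a minimal illustration, not Poetiq's actual code: `solve`, `score`, `stub_propose`, and the candidate list are hypothetical, and the stub stands in for an LLM call by simply trying the next idea in a fixed list.

```python
from typing import Callable, List, Optional, Tuple

Grid = List[List[int]]
Example = Tuple[Grid, Grid]
Rule = Callable[[Grid], Grid]

def score(rule: Rule, examples: List[Example]) -> int:
    """Count how many examples the rule reproduces exactly."""
    return sum(1 for inp, out in examples if rule(inp) == out)

def solve(propose: Callable[[List[str]], Tuple[str, Rule]],
          examples: List[Example],
          max_iters: int = 5) -> Optional[str]:
    feedback: List[str] = []                 # critiques of earlier attempts
    for _ in range(max_iters):
        name, rule = propose(feedback)       # propose a move
        hits = score(rule, examples)         # check it against the examples
        if hits == len(examples):
            return name                      # consistent with everything: done
        # Kill the bad idea, but keep a note for the next proposal.
        feedback.append(f"{name} matched {hits}/{len(examples)}")
    return None                              # budget exhausted, no answer

# Stand-in for a model: a real controller would send `feedback` to an LLM.
CANDIDATES: List[Tuple[str, Rule]] = [
    ("identity", lambda g: g),
    ("flip_vertical", lambda g: g[::-1]),
    ("mirror_rows", lambda g: [row[::-1] for row in g]),
]

def stub_propose(feedback: List[str]) -> Tuple[str, Rule]:
    return CANDIDATES[len(feedback) % len(CANDIDATES)]

examples: List[Example] = [([[1, 0], [0, 2]], [[0, 1], [2, 0]])]
print(solve(stub_propose, examples))
```

The stub never truly refines an idea. In a real system the feedback strings would go back into the prompt, so the next proposal can build on what almost worked.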

Because it’s model agnostic, it upgrades instantly. Swap in a better LLM and the whole thing improves without touching a single weight. And it stays cheap because the controller keeps the search small and stops early when it’s confident.
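One way to picture "model agnostic" is a controller that only depends on a plain function from prompt to text. The sketch below assumes that interface. `run_controller`, `weak_model`, and `strong_model` are hypothetical stand-ins, not real APIs, and the check is a trivial equality test.

```python
from typing import Callable, Optional, Tuple

Model = Callable[[str], str]   # assumed interface: prompt in, text out

def run_controller(model: Model,
                   check: Callable[[str], bool],
                   task: str,
                   budget: int = 4) -> Tuple[Optional[str], int]:
    """Call the model until the check passes or the budget runs out."""
    calls = 0
    prompt = task
    for _ in range(budget):
        answer = model(prompt)
        calls += 1
        if check(answer):
            return answer, calls          # stop early, no wasted calls
        prompt = f"{task}\nPrevious answer {answer!r} failed the check. Try again."
    return None, calls

# Two fake models behind the same interface.
def weak_model(prompt: str) -> str:
    # Only gets it right after being told its first answer failed.
    return "9" if "failed" in prompt else "7"

def strong_model(prompt: str) -> str:
    return "9"

def is_nine(answer: str) -> bool:
    return answer == "9"

print(run_controller(weak_model, is_nine, "What is 4 + 5?"))
print(run_controller(strong_model, is_nine, "What is 4 + 5?"))
```

Swapping `weak_model` for `strong_model` changes nothing in the controller, and the better model simply needs fewer calls, which is the cost argument in miniature.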

The Trend

The real story here is that Poetiq just proved something important: small teams can push the frontier without training a frontier model. That’s new. And it changes who gets to play.

This result shows there’s real value in building meta systems and refinement layers. Not theoretical value. Not “cool research.” Actual SOTA from a 6-person team. It’s the clearest signal yet that orchestrating models is its own field.

We’re going to see a wave of small teams copying this playbook. Take off-the-shelf LLMs. Add structure, feedback, and controlled search.

And this is where it gets interesting. Researchers thought 50 percent on ARC was years away. Then a tiny team did it in months. If meta systems keep improving, the gap between “expected progress” and “actual progress” is going to blow open.

If more groups start building these reasoning layers, we could see problem solving advance much faster than anyone predicted. Progress accelerates when the frontier isn’t controlled by a few companies.

Help Me Improve Failory

How useful did you find today’s newsletter? Your feedback helps me make future issues more relevant and valuable.

That's all for today’s edition.

Cheers,

Nico